# Deployment Scenarios

All of the following steps were tested as the root user.

# 1. Standalone with data persistence

# Prepare the machine

Prepare one machine to run the sudis program.

Its IP address is 192.168.1.200.

# Installation

Install sudis on 192.168.1.200. For detailed steps, see Quick Installation.

[root@192-168-1-200 ~]# ./sudis-3.0.0-472ecdc.x86_64.bin

Verifying archive integrity... 100% All good.

Uncompressing sudis 100%

Location: /opt/sudis

Install sudis finish.

# 1.1 Start the sudis service

Start the sudis service on 192.168.1.200.

[root@192-168-1-200 ~]# systemctl start sudis_ds

Check the status.

[root@192-168-1-200 sudis]# systemctl status sudis_ds

● sudis_ds.service - sudis_ds Service

Loaded: loaded (/usr/lib/systemd/system/sudis_ds.service; linked; vendor preset: disabled)

Active: active (running) since Mon 2024-04-22 15:03:23 CST; 20s ago

Main PID: 98988 (sudis_ds)

CGroup: /system.slice/sudis_ds.service

└─98988 /opt/sudis/bin/sudis_ds -c /opt/sudis/config.yaml

Apr 22 15:03:23 192-168-1-200 systemd[1]: Started sudis_ds Service.

The configuration file is /opt/sudis/config.yaml. The data persistence options are in the Persistence section, and persistence is enabled by default.

Persistence:

# The directory path for storing database backup files.

BackupDirectory: "/tmp/sudis"

# Whether the database restores backup files upon startup. Default is true.

RestoreBackupAtStartup: true

# Whether the database performs a full backup upon exiting. Default is true.

BackupAtExit: true
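Before relying on persistence, it can help to confirm where the backup files will be written. The following is a minimal sketch, assuming the Persistence section keeps the flat key/value layout shown above. The function name backup_dir is hypothetical, and the awk pattern is a simple line match, not a full YAML parser.

```shell
# backup_dir FILE: print the BackupDirectory value from a sudis config file.
# Assumption: the key appears once, written as  BackupDirectory: "/some/path"
backup_dir() {
  # Split on double quotes so that field 2 is the quoted path.
  awk -F'"' '/^[[:space:]]*BackupDirectory:/ { print $2; exit }' "$1"
}

# Example call (needs an installed sudis): backup_dir /opt/sudis/config.yaml
```

Comment lines that merely mention the directory are skipped, because the pattern requires the key itself at the start of the line.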

# 1.2 Connect with a client

[root@192-168-1-200 sudis]# redis-cli -p 9999

127.0.0.1:9999> set k1 10

OK

# 1.3 Stop

[root@192-168-1-200 sudis]# systemctl stop sudis_ds

Check the persistence file.

[root@192-168-1-200 ~]# ls -l /tmp/sudis/

total 4

-rw-r--r-- 1 root root 14 Apr 22 15:06 full_backup_0.pb

# 1.4 Restart

[root@192-168-1-200 sudis]# systemctl start sudis_ds

Verify that the data was persisted.

[root@192-168-1-200 ~]# redis-cli -p 9999

127.0.0.1:9999> get k1

"10"

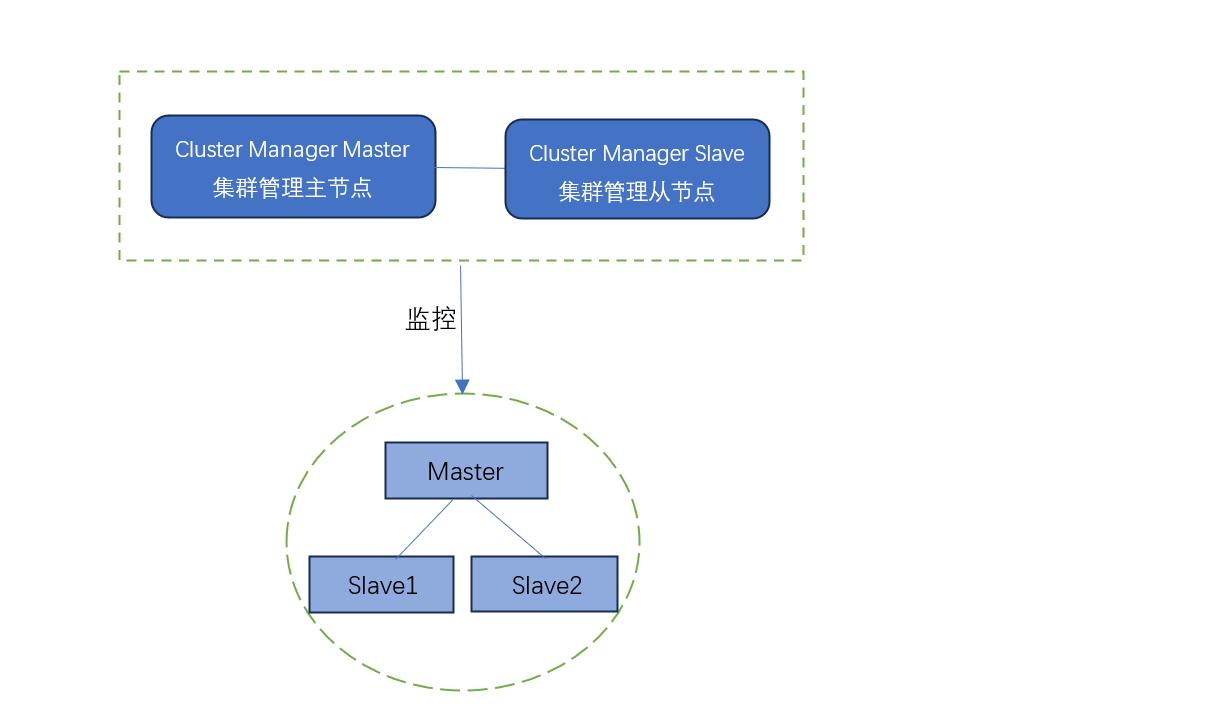

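# 2. Sentinel mode (1 master, 3 slaves)

# Prepare the machines

Prepare 4 machines to run the sudis master and slave processes.

Their IP addresses are 192.168.1.200, 192.168.1.201, 192.168.1.202, and 192.168.1.203.

Prepare 2 machines to run the master and slave manager processes.

Their IP addresses are 192.168.1.204 and 192.168.1.205.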

# 2.1 Installation

Install sudis on each of 192.168.1.200 through 192.168.1.205. For detailed steps, see Quick Installation.

[root@192-168-1-200 ~]# ./sudis-3.0.0-472ecdc.x86_64.bin

Verifying archive integrity... 100% All good.

Uncompressing sudis 100%

Location: /opt/sudis

Install sudis finish.

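# 2.2 Start the agent service

Note:

When multiple hosts are used, the agent service must be started on every host.

Start the agent service on each of 192.168.1.200 through 192.168.1.205.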

[root@192-168-1-200 ~]# systemctl start sudis_agent

Check that the agent service started successfully.

[root@192-168-1-200 ~]# systemctl status sudis_agent

● sudis_agent.service - sudis_agent Service

Loaded: loaded (/usr/lib/systemd/system/sudis_agent.service; linked; vendor preset: disabled)

Active: active (running) since Fri 2024-04-19 13:48:33 CST; 7s ago

Main PID: 5564 (sudis_agent)

CGroup: /system.slice/sudis_agent.service

└─5564 /opt/sudis/bin/sudis_agent -c /opt/sudis/agent/agent_config.yaml

Apr 19 13:48:33 192-168-1-200 systemd[1]: Started sudis_agent Service.

# 2.3 Create the manager service

Parameter explanation:

- In 192.168.1.204:16667:16384:

- 192.168.1.204 is the host IP address

- 16667 is the port the manager listens on

- 16384 is the agent port
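The address layout described above can be sketched as a small helper that splits one createmanager address into its three fields. The function name split_manager_addr is hypothetical. It only handles the IP:manager port:agent port order used by createmanager; the stopmanager examples later in this section write the address as IP:agent port:manager port, so the order appears to depend on the subcommand.

```shell
# split_manager_addr IP:MANAGER_PORT:AGENT_PORT
# Prints the three fields with labels, using plain parameter expansion.
split_manager_addr() {
  local ip rest manager_port agent_port
  ip=${1%%:*}              # text before the first colon
  rest=${1#*:}             # text after the first colon
  manager_port=${rest%%:*}
  agent_port=${rest#*:}
  printf 'ip=%s manager_port=%s agent_port=%s\n' "$ip" "$manager_port" "$agent_port"
}
```

For the master address in this example, split_manager_addr 192.168.1.204:16667:16384 prints ip=192.168.1.204 manager_port=16667 agent_port=16384.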

Run the following command on 192.168.1.204 to create the master manager on 192.168.1.204 and the slave manager on 192.168.1.205.

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm agent createmanager --master 192.168.1.204:16667:16384 --slave 192.168.1.205:16668:16384

meta:

id: 11

code: 0

msg: Success

View the master and slave manager information on 192.168.1.204.

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm manager listmanagers

meta:

id: 20

code: 0

msg: Success

id: 0

port: 16667

agent_port: 16384

ip_addr: 192.168.1.204

role: HOST_ROLE_MANAGER_MASTER

state: 2

cfg_path: /opt/sudis/cms/1/manager_config.yaml

peer_port: 16668

peer_ip_addr: 192.168.1.205

View the master manager process on 192.168.1.204.

[root@192-168-1-204 ~]# ps -ef | grep sudis

root 1897 1 0 14:03 ? 00:00:00 /opt/sudis/bin/sudis_agent -c /opt/sudis/agent/agent_config.yaml

root 1931 1897 0 14:05 ? 00:00:00 sudis_cms -r master -a 192.168.1.205 -p 16668 -l 16667 -i 16384 -s 192.168.1.204 -d /opt/sudis/cms/1

root 1968 1470 0 14:09 pts/0 00:00:00 grep --color=auto sudis

View the slave manager process on 192.168.1.205.

[root@192-168-1-205 ~]# ps -ef | grep sudis

root 1868 1 0 14:04 ? 00:00:00 /opt/sudis/bin/sudis_agent -c /opt/sudis/agent/agent_config.yaml

root 1889 1868 0 14:05 ? 00:00:00 sudis_cms -r slave -a 192.168.1.204 -p 16667 -l 16668 -i 16384 -s 192.168.1.205 -d /opt/sudis/cms/1

root 1910 1628 0 14:10 pts/0 00:00:00 grep --color=auto sudis

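# 2.4 Create the sentinel service

Run the following command on 192.168.1.204 to create a sentinel-mode cluster.

Parameter explanation:

- --type 1 selects sentinel mode; if omitted, the default is --type 0, which is cluster mode

- --name sentineltest sets the cluster name

- --masternum 1 sets the number of master nodes to 1

- --replicas 3 gives each master node 3 slave nodes

- --hosts lists the 4 nodes in order, each written as IP:agent port:service port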

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm manager --addr 127.0.0.1:16667 createcluster --type 1 --name sentineltest --masternum 1 --replicas 3 --hosts 192.168.1.200:16384:10001 192.168.1.201:16384:10002 192.168.1.202:16384:10003 192.168.1.203:16384:10004

meta:

id: 1

code: 0

msg: Success

cluster_id: 1
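Typing a long --hosts list by hand is error-prone, so here is a minimal sketch that generates it. The helper name build_hosts is hypothetical, and the rule that the service port increases by one per host simply mirrors this example (10001 to 10004); nothing in the source says sudis requires it.

```shell
# build_hosts AGENT_PORT FIRST_SERVICE_PORT IP...
# Prints "IP:AGENT_PORT:SERVICE_PORT" entries separated by spaces.
build_hosts() {
  local agent_port=$1 service_port=$2 out="" ip
  shift 2
  for ip in "$@"; do
    # ${out:+$out } adds a separating space only after the first entry.
    out="${out:+$out }$ip:$agent_port:$service_port"
    service_port=$((service_port + 1))
  done
  printf '%s\n' "$out"
}

# Example: build_hosts 16384 10001 192.168.1.200 192.168.1.201 192.168.1.202 192.168.1.203
```

The printed string can be pasted after --hosts in the createcluster command shown above.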

View the created sentinel cluster.

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm manager --addr 127.0.0.1:16667 listclusters

meta:

id: 6

code: 0

msg: Success

identitys:

- name: sentineltest

cluster_id: 1

View the details of the sentinel-mode cluster.

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm manager showcluster --cluster_id 1

meta:

id: 2

code: 0

msg: Success

state: 1

config:

type: CLUSTER_TYPE_SENTINEL

name: sentineltest

master_num: 1

replica: 3

db_usage:

keys: 0

data_size: 0

hosts_details:

- host:

id: 1

port: 10001

ip_addr: 192.168.1.200

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_INIT

slots_str: "[0,16383]"

master_id: 0

db_usage:

keys: 0

data_size: 0

- host:

id: 2

port: 10002

ip_addr: 192.168.1.201

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 3

port: 10003

ip_addr: 192.168.1.202

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 4

port: 10004

ip_addr: 192.168.1.203

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

# 2.5 Start sentinel mode

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm manager startcluster --cluster_id 1

meta:

id: 15

code: 0

msg: Success

View the cluster details.

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm manager showcluster --cluster_id 1

meta:

id: 2

code: 0

msg: Success

state: 4

config:

type: CLUSTER_TYPE_SENTINEL

name: sentineltest

master_num: 1

replica: 3

db_usage:

keys: 1

data_size: 4

hosts_details:

- host:

id: 1

port: 10001

ip_addr: 192.168.1.200

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_OK

slots_str: "[0,16383]"

master_id: 0

db_usage:

keys: 1

data_size: 4

- host:

id: 2

port: 10002

ip_addr: 192.168.1.201

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_OK

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 3

port: 10003

ip_addr: 192.168.1.202

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_OK

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 4

port: 10004

ip_addr: 192.168.1.203

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_OK

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0
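Scanning a long showcluster listing by eye for node states is tedious. The sketch below counts how many nodes report NODE_STATE_OK. The function name count_ok_nodes is hypothetical, and it assumes the output keeps the "state: NODE_STATE_..." lines shown above; the numeric cluster-level state line is ignored.

```shell
# count_ok_nodes: read showcluster output on stdin, print "OK/TOTAL".
count_ok_nodes() {
  awk '$1 == "state:" && $2 ~ /^NODE_STATE_/ {
         total++
         if ($2 == "NODE_STATE_OK") ok++
       }
       END { printf "%d/%d\n", ok, total }'
}

# Example (needs a running manager):
#   /opt/sudis/bin/sudis_adm manager showcluster --cluster_id 1 | count_ok_nodes
```

For the listing above, where all four nodes are in NODE_STATE_OK, this prints 4/4.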

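# 2.6 Test commands for client connections

Note:

26667 is the sentinel service port. By default it is the manager port plus 10000; in this example the manager port is 16667, so the sentinel port is 26667.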
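The default port rule in the note can be written as a one-line helper. The function name sentinel_port is hypothetical, and the sketch covers only the default offset of 10000 stated above.

```shell
# sentinel_port MANAGER_PORT: print the default sentinel port.
sentinel_port() {
  echo $(( $1 + 10000 ))
}

# Example: redis-cli -h 192.168.1.204 -p "$(sentinel_port 16667)"
```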

[root@192-168-1-200 ~]# redis-cli -h 192.168.1.200 -p 10001

192.168.1.200:10001> set k1 10

OK

192.168.1.200:10001> set k2 10

OK

[root@192-168-1-200 ~]# redis-cli -h 192.168.1.204 -p 26667

192.168.1.204:26667> sentinel masters

1) 1) "name"

2) "sentineltest"

3) "ip"

4) "192.168.1.200"

5) "port"

6) "10001"

7) "runid"

8) "1"

9) "flags"

10) "master"

11) "num-slaves"

12) "3"

13) "num-other-sentinels"

14) "1"

# 2.7 Stop the services

Stop the cluster.

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm manager stopcluster --cluster_id 1

meta:

id: 16

code: 0

msg: Success

Check the status.

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm manager showcluster --cluster_id 1

meta:

id: 2

code: 0

msg: Success

state: 8

config:

type: CLUSTER_TYPE_SENTINEL

name: sentineltest

master_num: 1

replica: 3

db_usage:

keys: 2

data_size: 8

hosts_details:

- host:

id: 1

port: 10001

ip_addr: 192.168.1.200

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_INIT

slots_str: "[0,16383]"

master_id: 0

db_usage:

keys: 2

data_size: 8

- host:

id: 2

port: 10002

ip_addr: 192.168.1.201

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 3

port: 10003

ip_addr: 192.168.1.202

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 4

port: 10004

ip_addr: 192.168.1.203

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

Stop the managers.

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm agent stopmanager --addr 192.168.1.204:16384:16667

meta:

id: 18

code: 0

msg: success

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm agent stopmanager --addr 192.168.1.205:16384:16668

meta:

id: 18

code: 0

msg: success

Stop the agent on each of 192.168.1.200 through 192.168.1.205.

[root@192-168-1-200 ~]# systemctl stop sudis_agent

# 2.8 Restart

Start the agent on each of 192.168.1.200 through 192.168.1.205.

[root@192-168-1-200 ~]# systemctl start sudis_agent

Run the following commands on 192.168.1.204 to start the managers.

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm agent startmanager --addrs 192.168.1.204:16384 --dirid 1

meta:

id: 18

code: 0

msg: Success

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm agent startmanager --addrs 192.168.1.205:16384 --dirid 1

meta:

id: 18

code: 0

msg: Success

Alternatively, run the following equivalent command.

[root@192-168-1-204 ~]# /opt/sudis/bin/sudis_adm agent startmanager --addrs 192.168.1.204:16384 192.168.1.205:16384

meta:

id: 18

code: 0

msg: Success

Restart sentinel mode.

[root@192-168-1-204 cms]# /opt/sudis/bin/sudis_adm manager startcluster --cluster_id 1

meta:

id: 15

code: 0

msg: Success

# 2.9 Cleanup

Remove the manager configuration.

rm -rf /opt/sudis/cms

Remove the sudis installation and the backup data.

rm -rf /opt/sudis /tmp/sudis

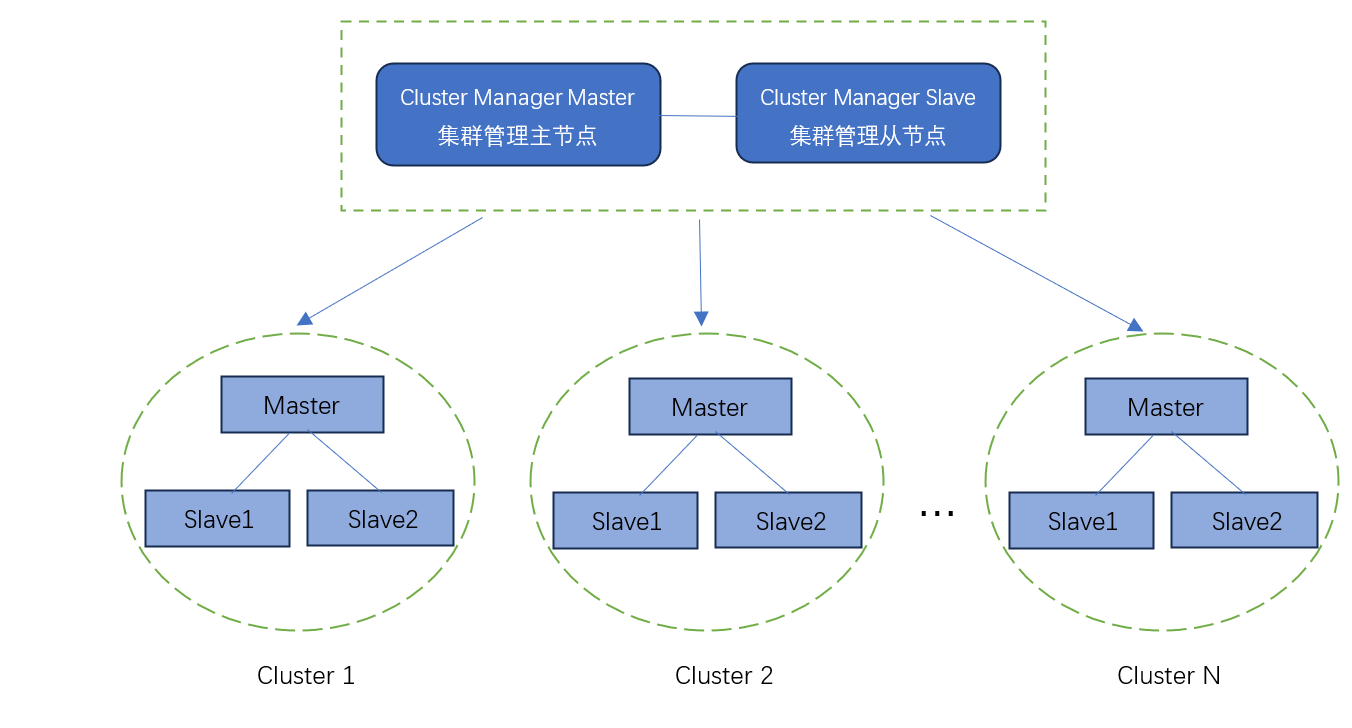

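# 3. Cluster mode (3 masters, 6 slaves)

# Prepare the machines

Prepare 9 machines to run the sudis master and slave processes: 3 master nodes, each with 2 slave nodes.

Their IP addresses are 192.168.1.200, 192.168.1.201, 192.168.1.202, 192.168.1.203, 192.168.1.204, 192.168.1.205, 192.168.1.206, 192.168.1.207, and 192.168.1.208.

Of these, 192.168.1.207 and 192.168.1.208 also run the master and slave manager processes.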

# 3.1 Installation

Install sudis on each of 192.168.1.200 through 192.168.1.208. For detailed steps, see Quick Installation.

[root@192-168-1-200 ~]# ./sudis-3.0.0-472ecdc.x86_64.bin

Verifying archive integrity... 100% All good.

Uncompressing sudis 100%

Location: /opt/sudis

Install sudis finish.

# 3.2 Start the agent service

Start the agent service on each of 192.168.1.200 through 192.168.1.208.

[root@192-168-1-200 ~]# systemctl start sudis_agent

Check the status.

[root@192-168-1-200 ~]# systemctl status sudis_agent

● sudis_agent.service - sudis_agent Service

Loaded: loaded (/usr/lib/systemd/system/sudis_agent.service; linked; vendor preset: disabled)

Active: active (running) since Mon 2024-04-22 09:27:22 CST; 10s ago

Main PID: 5611 (sudis_agent)

CGroup: /system.slice/sudis_agent.service

└─5611 /opt/sudis/bin/sudis_agent -c /opt/sudis/agent/agent_config...

Apr 22 09:27:22 192-168-1-200 systemd[1]: Started sudis_agent Service.

# 3.3 Create the manager service

Run the following command on 192.168.1.207 to create the master manager on 192.168.1.207 and the slave manager on 192.168.1.208.

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm agent createmanager --master 192.168.1.207:16667:16384 --slave 192.168.1.208:16668:16384

meta:

id: 11

code: 0

msg: Success

View the master and slave manager information on 192.168.1.207.

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm manager listmanagers

meta:

id: 20

code: 0

msg: Success

id: 0

port: 16667

agent_port: 16384

ip_addr: 192.168.1.207

role: HOST_ROLE_MANAGER_MASTER

state: 2

cfg_path: /opt/sudis/cms/1/manager_config.yaml

peer_port: 16668

peer_ip_addr: 192.168.1.208

View the master manager process on 192.168.1.207.

[root@192-168-1-207 ~]# ps -ef | grep sudis

root 1832 1 0 09:30 ? 00:00:00 /opt/sudis/bin/sudis_agent -c /opt/sudis/agent/agent_config.yaml

root 1866 1832 0 09:33 ? 00:00:00 sudis_cms -r master -a 192.168.1.208 -p 16668 -l 16667 -i 16384 -s 192.168.1.207 -d /opt/sudis/cms/1

root 1900 1402 0 09:37 pts/0 00:00:00 grep --color=auto sudis

View the slave manager process on 192.168.1.208.

[root@192-168-1-208 ~]# ps -ef | grep sudis

root 1828 1 0 09:30 ? 00:00:00 /opt/sudis/bin/sudis_agent -c /opt/sudis/agent/agent_config.yaml

root 1851 1828 0 09:33 ? 00:00:00 sudis_cms -r slave -a 192.168.1.207 -p 16667 -l 16668 -i 16384 -s 192.168.1.208 -d /opt/sudis/cms/1

root 1873 1632 0 09:38 pts/0 00:00:00 grep --color=auto sudis

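# 3.4 Create a cluster with 3 masters and 6 slaves

Parameter explanation:

- --addr 127.0.0.1:16667 is the address and port of the manager

- --name clustertest sets the cluster name

- --masternum 3 sets the number of master nodes to 3

- --replicas 2 gives each master node 2 slave nodes

- --hosts lists the 9 nodes in order, each written as IP:agent port:service port

Run the following command on 192.168.1.207 to create the cluster.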

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm manager --addr 127.0.0.1:16667 createcluster --name clustertest --masternum 3 --replicas 2 --hosts 192.168.1.200:16384:10001 192.168.1.201:16384:10002 192.168.1.202:16384:10003 192.168.1.203:16384:10004 192.168.1.204:16384:10005 192.168.1.205:16384:10006 192.168.1.206:16384:10007 192.168.1.207:16384:10008 192.168.1.208:16384:10009

meta:

id: 1

code: 0

msg: Success

cluster_id: 1

View the created cluster.

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm manager --addr 127.0.0.1:16667 listclusters

meta:

id: 6

code: 0

msg: Success

identitys:

- name: clustertest

cluster_id: 1

View the cluster details.

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm manager showcluster --cluster_id 1

meta:

id: 2

code: 0

msg: Success

state: 1

config:

type: CLUSTER_TYPE_CLUSTER

name: clustertest

master_num: 3

replica: 2

db_usage:

keys: 0

data_size: 0

hosts_details:

- host:

id: 1

port: 10001

ip_addr: 192.168.1.200

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_INIT

slots_str: "[0,5460]"

master_id: 0

db_usage:

keys: 0

data_size: 0

- host:

id: 2

port: 10002

ip_addr: 192.168.1.201

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 3

port: 10003

ip_addr: 192.168.1.202

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 4

port: 10004

ip_addr: 192.168.1.203

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_INIT

slots_str: "[5461,10921]"

master_id: 0

db_usage:

keys: 0

data_size: 0

- host:

id: 5

port: 10005

ip_addr: 192.168.1.204

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 4

db_usage:

keys: 0

data_size: 0

- host:

id: 6

port: 10006

ip_addr: 192.168.1.205

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 4

db_usage:

keys: 0

data_size: 0

- host:

id: 7

port: 10007

ip_addr: 192.168.1.206

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_INIT

slots_str: "[10922,16383]"

master_id: 0

db_usage:

keys: 0

data_size: 0

- host:

id: 8

port: 10008

ip_addr: 192.168.1.207

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 7

db_usage:

keys: 0

data_size: 0

- host:

id: 9

port: 10009

ip_addr: 192.168.1.208

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 7

db_usage:

keys: 0

data_size: 0
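The slots_str values in the listing split the 16384 hash slots across the three masters as [0,5460], [5461,10921], and [10922,16383]. The sketch below reproduces those ranges with an even split in which the last master takes the remainder. The function name slot_ranges is hypothetical, and whether sudis applies exactly this rule for other master counts is an assumption.

```shell
# slot_ranges MASTER_COUNT: print one "[start,end]" range per master.
slot_ranges() {
  local n=$1 total=16384 size i=0 start end
  size=$((total / n))                # integer division
  while [ "$i" -lt "$n" ]; do
    start=$((i * size))
    if [ "$i" -eq $((n - 1)) ]; then
      end=$((total - 1))             # last master absorbs the remainder
    else
      end=$((start + size - 1))
    fi
    printf '[%d,%d]\n' "$start" "$end"
    i=$((i + 1))
  done
}
```

Running slot_ranges 3 prints the three ranges shown above, one per line, and slot_ranges 1 prints [0,16383], which matches the single master in the sentinel example.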

# 3.5 Start the cluster

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm manager startcluster --cluster_id 1

meta:

id: 15

code: 0

msg: Success

View the cluster details.

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm manager showcluster --cluster_id 1

meta:

id: 2

code: 0

msg: Success

state: 4

config:

type: CLUSTER_TYPE_CLUSTER

name: clustertest

master_num: 3

replica: 2

db_usage:

keys: 4

data_size: 16

hosts_details:

- host:

id: 1

port: 10001

ip_addr: 192.168.1.200

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_OK

slots_str: "[0,5460]"

master_id: 0

db_usage:

keys: 2

data_size: 8

- host:

id: 2

port: 10002

ip_addr: 192.168.1.201

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_OK

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 3

port: 10003

ip_addr: 192.168.1.202

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_OK

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 4

port: 10004

ip_addr: 192.168.1.203

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_OK

slots_str: "[5461,10921]"

master_id: 0

db_usage:

keys: 2

data_size: 8

- host:

id: 5

port: 10005

ip_addr: 192.168.1.204

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_OK

slots_str: ""

master_id: 4

db_usage:

keys: 0

data_size: 0

- host:

id: 6

port: 10006

ip_addr: 192.168.1.205

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_OK

slots_str: ""

master_id: 4

db_usage:

keys: 0

data_size: 0

- host:

id: 7

port: 10007

ip_addr: 192.168.1.206

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_OK

slots_str: "[10922,16383]"

master_id: 0

db_usage:

keys: 0

data_size: 0

- host:

id: 8

port: 10008

ip_addr: 192.168.1.207

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_OK

slots_str: ""

master_id: 7

db_usage:

keys: 0

data_size: 0

- host:

id: 9

port: 10009

ip_addr: 192.168.1.208

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_OK

slots_str: ""

master_id: 7

db_usage:

keys: 0

data_size: 0

# 3.6 Test commands for client connections

[root@192-168-1-200 ~]# redis-cli -c -h 192.168.1.200 -p 10001

192.168.1.200:10001> set k1 10

-> Redirected to slot [12706] located at 192.168.1.206:10007

OK

192.168.1.206:10007> set k2 10

-> Redirected to slot [449] located at 192.168.1.200:10001

OK
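The redirects in the session above follow from how keys map to slots. The sketch below assumes sudis uses the same mapping as Redis Cluster, namely CRC16 (XMODEM variant, polynomial 0x1021) of the key modulo 16384; the slots reported above for k1 and k2 are consistent with that assumption. The function name key_slot is hypothetical, and hash tags in braces are not handled.

```shell
# key_slot KEY: print the hash slot for KEY (CRC16-XMODEM mod 16384).
key_slot() {
  local crc=0 byte bit
  # od prints each byte of the key as an unsigned decimal number.
  for byte in $(printf '%s' "$1" | od -An -tu1); do
    crc=$(( crc ^ (byte << 8) ))
    bit=0
    while [ "$bit" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      bit=$((bit + 1))
    done
  done
  echo $(( crc % 16384 ))
}
```

key_slot k1 prints 12706, which falls in [10922,16383] and is therefore served by 192.168.1.206:10007; key_slot k2 prints 449, which falls in [0,5460] on 192.168.1.200:10001.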

# 3.7 Stop

Stop the cluster.

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm manager stopcluster --cluster_id 1

meta:

id: 16

code: 0

msg: Success

Check the status.

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm manager showcluster --cluster_id 1

meta:

id: 2

code: 0

msg: Success

state: 8

config:

type: CLUSTER_TYPE_CLUSTER

name: clustertest

master_num: 3

replica: 2

db_usage:

keys: 5

data_size: 20

hosts_details:

- host:

id: 1

port: 10001

ip_addr: 192.168.1.200

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_INIT

slots_str: "[0,5460]"

master_id: 0

db_usage:

keys: 2

data_size: 8

- host:

id: 2

port: 10002

ip_addr: 192.168.1.201

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 3

port: 10003

ip_addr: 192.168.1.202

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 1

db_usage:

keys: 0

data_size: 0

- host:

id: 4

port: 10004

ip_addr: 192.168.1.203

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_INIT

slots_str: "[5461,10921]"

master_id: 0

db_usage:

keys: 2

data_size: 8

- host:

id: 5

port: 10005

ip_addr: 192.168.1.204

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 4

db_usage:

keys: 0

data_size: 0

- host:

id: 6

port: 10006

ip_addr: 192.168.1.205

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 4

db_usage:

keys: 0

data_size: 0

- host:

id: 7

port: 10007

ip_addr: 192.168.1.206

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_MASTER

state: NODE_STATE_INIT

slots_str: "[10922,16383]"

master_id: 0

db_usage:

keys: 1

data_size: 4

- host:

id: 8

port: 10008

ip_addr: 192.168.1.207

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 7

db_usage:

keys: 0

data_size: 0

- host:

id: 9

port: 10009

ip_addr: 192.168.1.208

agent_port: 16384

cport: 0

role: HOST_ROLE_DATA_SLAVE

state: NODE_STATE_INIT

slots_str: ""

master_id: 7

db_usage:

keys: 0

data_size: 0

Stop the managers.

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm agent stopmanager --addr 192.168.1.207:16384:16667

meta:

id: 18

code: 0

msg: success

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm agent stopmanager --addr 192.168.1.208:16384:16668

meta:

id: 18

code: 0

msg: success

Stop the agent on each of 192.168.1.200 through 192.168.1.208.

[root@192-168-1-200 ~]# systemctl stop sudis_agent

# 3.8 Restart

Start the agent on each of 192.168.1.200 through 192.168.1.208.

[root@192-168-1-200 ~]# systemctl start sudis_agent

Run the following commands on 192.168.1.207 to start the managers.

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm agent startmanager --addrs 192.168.1.207:16384 --dirid 1

meta:

id: 18

code: 0

msg: Success

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm agent startmanager --addrs 192.168.1.208:16384 --dirid 1

meta:

id: 18

code: 0

msg: Success

Alternatively, run the following equivalent command.

[root@192-168-1-207 ~]# /opt/sudis/bin/sudis_adm agent startmanager --addrs 192.168.1.207:16384 192.168.1.208:16384

meta:

id: 18

code: 0

msg: Success

Restart the cluster.

[root@192-168-1-207 cms]# /opt/sudis/bin/sudis_adm manager startcluster --cluster_id 1

meta:

id: 15

code: 0

msg: Success

# 3.9 Cleanup

Remove the manager configuration.

rm -rf /opt/sudis/cms

Remove the sudis installation and the backup data.

rm -rf /opt/sudis /tmp/sudis